Image Retrieval: A Picture is worth 8 × 8 × 8 Words

Introduction

We tested and designed an image retrieval system that leverages traditional document similarity metrics during retrieval time. Our method first encodes input images into a 3D matrix of discrete tokens, and then computes k-grams in multiple dimensions. We then index images as a global Bag-of-Words (BoW) vector. We assessed the performance of our model on the GPR1200 dataset, and experimented with various similarity scoring methods for BoW. Using term frequency-inverse document frequency (tf-idf), we achieved a mean average precision (mAP) that is 6% worse than the comparable non-quantized method reported by the authors of GPR1200.

Background

A common strategy for information retrieval is to compare the frequencies of different terms between different documents. Search engines such as Tantivy and Lucene retrieve documents using an inverted term index. BoW representations fit naturally into these implementations, and as a result have been widely implemented in different image retrieval solutions [8].

Cao et al. [1] obtained image tokens using k-means clustering of the intermediate activation of a convolutional network. Other methods have been proposed that use end to end training of the token representations for image retrieval [4, 1, 2]. Despite training our token representations without much consideration of their downstream usage in for image retrieval, our method still obtains reasonable benchmark results.

Data Exploration

Data Sources

We fine tune our VQ-ResNet models using the ImageNet LSVRC 2012 Training Set. We then evaluate the accuracy of VQ-ResNet on the validation set, ImageNet LSVRC 2012 Validation Set. Both of these datasets were retrieved from Academic Torrents.

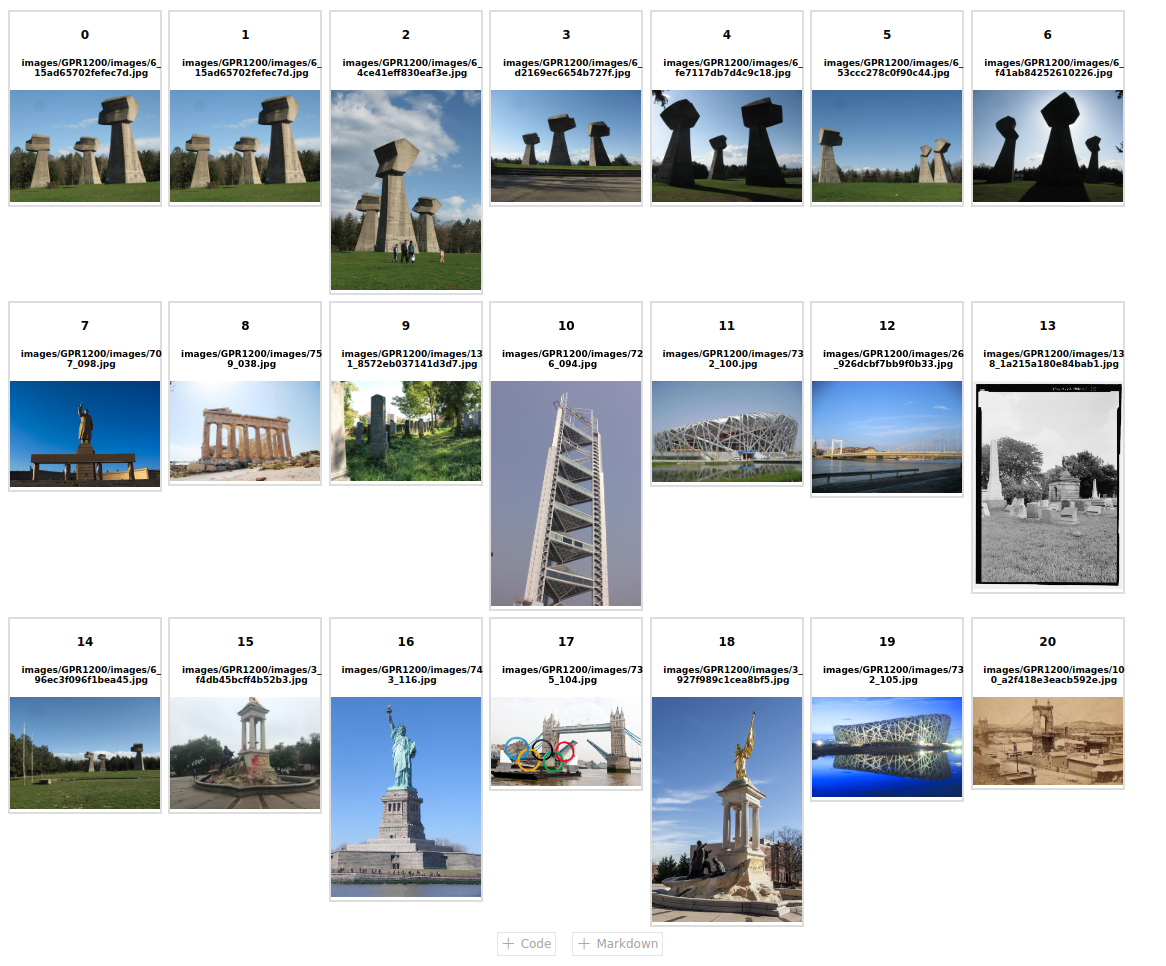

We evaluate image retrieval performance on the GPR1200 dataset [7]. This benchmark set contains a diverse collection of general-purpose images, and measures the ability of a model to retrieve images in similar categories such as objects, places, or people. The authors provided baseline scores for the embeddings of various image models, and their reported retrieval mAP (mean average presicion) using embeddings from a ResNet101, was 42.8.

In prior reports we were considering the FlickrFaces dataset and Oxford5k dataset. Both were ultimately dropped: The FlickrFaces dataset did not provide the nececssary image labels to conduct accurate image retrieval; and the Oxford5k dataset didn’t encompass a wide enough image category to prove the robustness of our methods. This led to selecting the GPR1200 dataset.

1 Methodology

Indexing Pipeline

Our unoptimized pipline takes about 60 seconds on a Nvidia 3070 GPU and Ryzen 3700 CPU to fully index the GPR1200 dataset. This is with an image resolution of 256, batch size of 64, and for k grams, k = 3 with no padding or stride, on our RN50 model.

In compute limited settings, such as on a 4-core laptop, the indexing time of GPR1200 is about 30 minutes, with most of the time being spent running image batches through the VQ-Resnet.

Our indexing pipeline is invariant to input image resolution. Our pipeline indexes any image of any input resolution, (as long as the embedding shape is larger than the k-grams kernel size). The result of the indexing pipeline is that all images are stored in memory as a BoW vector.

-

Batching images: We sort images by aspect ratio, and then group images into batches. The same aspect ratio is maintained across all images in a batch. Different batches of images have different spatial resolutions. Images in the same batch were slightly scaled to have the same resolution. The max of height/width of the image batches can be modified as a hyperparameter. This batching step parallelizes following pipeline operations.

-

VQ-ResNet Model: Image batches are passed through our VQ-ResNet model to obtain 3D arrays of tokens, or ”words,” that encode meaningful information about the contents of the images. The shape of these embeddings depended on the input batch’s spatial resolution, and the number of codebook heads.

-

Calculating kgrams: For each embedding, a window of size k×k×c, where c is the number of codebook heads, is slid across all possible positions over the batched embeddings. The value counts of different tokens within this window are stored, resulting in a tensor of shape (k by k by k by the number of possible tokens). This tensor is the Bag-of-Words for each image.

VQ-ResNet Model

ResNet is a deep learning model widely used in the computer vision task of image classification. The intermediate activation’s contain information about the types of objects that are present in an input image, which is useful for image retrieval, as images containing similar objects should ideally have similar intermediate activation’s within ResNet.

We took inspiration from learned vector quantization models [6], and obtain our token representation of images using a learned vector quantization layer. We modified a pretrained ResNet50 model from torchvision, placing a Vector Quantization (VQ) layer after the last convolutional layer. We froze the first three convolutional blocks of ResNet, and train the model for four epochs on the ImageNet LSVRC 2012 training set. Our best performing VQ-Resnet reached close to original accuracy@5 (within 5%) and accuracy@1 (within 3%) on the validation set.

It would have been interesting to compare the performance of a non-learned vs learned vector quantization layer. Our learned VQ layer did require training the weights of the original model. In particular, freezing all of the ResNet layers led to more inaccuracies on ImageNet, but overall, the validation accuracy of our best model has shown that the tokens do contain meaningful ”words” that describe the objects classes present in images.

In all of our experiments we use a multi-headed vector quantization layer [5]. For a single image, the resulting tokens from VQ-Resnet are shaped c×H ×W, where c is the number of VQ heads. The codebook IDs are integers from 0 to the number of codebooks, which we varied in our experiments.

From 3D Token Embeddings to BoW: Multidimensional K Grams

We obtain Bag-of-Words (BoW) representations of our discrete tokens by using K grams. This allows us to obtain a global vector representation of gram frequencies. We define a gram as all possible token values in a k by k window over the last two dimensions of an input embedding.

Our intuition is that given the fully convolutional architecture of the first four blocks of ResNet, the information in the intermediate activations is highly localized, meaning much of the spatial information in the intermediate activation’s of a convolutional network is kept to small sub-areas. Discarding long-range information in this activation should not have left out much detail about how the network interprets different activation’s, as convolutional networks usually have small kernels; at any given single layer, long-range information is not able to be interpreted or transmitted.

We extend the notion of K-grams into 2 dimensions; given a 3D input tensor of discrete integer class values, 2D K-grams returns the value counts of the tokens over all positions of a k by k window over the last two dimensions of the input tensor. Conceptually, a k by k window is slid across the input tensor, and then the counts of integer classes at each position in the window are stored. On an 8 by 8 by 8 input tensor, with integer class ids from 0 to 63, 2D 3-grams returns an 8 by 3 by 3 by 64 tensor containing the value counts of each class id at each position in the 33 window.

We also experiment with using 3D k grams. 3D K grams applies the kernel along the channel dimension, as well as the height and width of the embedded 3D tokens. This results in a much smaller total BoW size.

Using 2D k grams, the size of the BoW descriptive vector for a single image is equal to c⋅k ⋅k ⋅n, where c is the number of channels in the 3D tensor, and n - 1 is the max possible integer class id.

Using 3D k grams, the size of the BoW descriptive vector for a single image is equal to k ⋅ k ⋅ k ⋅ n.

Similarity Calculation: Evaluating Image Similarities Using Various Techniques

In this project, we compare the mAP scores obtained from different similarity scorings between image embeddings.

Jaccard Similarity:

The choice of using Jaccard Similarity and TF-IDF as similarity measures in this project is based on their widespread use and proven effectiveness in comparing sets and weighing the importance of tokens, respectively. The Jaccard similarity is calculated as the intersection of two sets divided by their union:

where A and B represent the sets being compared.

The Jaccard similarity is intuitive and easy to compute, though it may not be the best choice for our tokens. We experimented with cropping all dataset images to the same size, and then measuring similarity based on elementwise equality of the tokens. This produced lackluster scores compared to our BoW method, probably because it doesn’t take into account the frequency of different terms. The importance of different tokens being equal between two embeddings is likely not the same for all tokens.

TF-IDF:

The TF-IDF technique takes into account the frequency of tokens in a document and their rarity across a collection of documents. The TF-IDF weight for a token t in a document d is calculated as:

where TF(t,d) is the term frequency of token t in document d, and IDF(t) = log DFN(t) is the inverse document frequency of token t, with N being the total number of documents and DF(t) representing the number of documents containing the token t.

Similarity Measure Comparison and Selection:

We compare the performance of Jaccard Similarity and TF-IDF using the Mean Average Precision (mAP) evaluation metric. Our experiments indicate that the TF-IDF applied on our obtained BoW, outperforms Jaccard Similarity applied on the VQ-Resnet tokens.

Experiments and Results

Mean Average Precision: Calculation and Interpretation

The Mean Average Precision (mAP) metric is calculated by first determining the Average Precision (AP) for each query in the dataset. AP is computed as the average of the precision values obtained at each relevant item in the ranked retrieval results. We analyze the mAP values obtained for various parameter configurations and similarity measures on GPR1200.

Experiment Setup

mAP scores are measure on the GPR1200 dataset.

Explanation of model parameters:

-

Heads: Number of codebook attention heads [5]. All of our experiments used a shared codebook.

-

Codebook Dim: The z-dimension of the codebook. A linear layer projects each input vector of size Dim, to the codebook space.

-

Codebook Size: The number k, or the number of codebook vectors.

-

Commitment Weight: See equation 3 from Oord, Vinyals, and Kavukcuoglu [6]

-

Threshold EMA Dead Code: Randomly reinitializes codes that are not being selected by the network.

-

Dim: The input dimension, which is from the preceding ResNet Conv layer.

Explanation of k-grams parameters:

-

SimilarityMeasurement: Labels what methods was used to measure similarity, Jaccard or TF-IDF.

-

Model: Indicates which model was used from the model table prior to the similarity measurements.

-

Resolution: Indicates the size of the image space across various aspect ratios.

-

Kernel: Dimensions of the k-grams calculation (NAN means Jaccard similarity was used)

-

Padding: Dimensions of the k-grams calculation (NAN means Jaccard similarity was used)

-

should_channel_kgrams: Whether k grams is applied to the channel dimension. If this is true, then the k-grams window is applied to the channel dimension, or the ‘codebook head‘ dimension.

-

GPR1200 mAP (shown as mAP): mean average precision across all categories

-

Landmarks, IMSketch, iNat, Instre, SOP, Faces: categories of images found within the GPR1200 dataset. Each value is the mAP value for the relevant category.

Results

We evaluate our method’s mAP score on the GPR1200 benchmark task, testing the effect of various k-grams parameters, as well as VQ-ResNet parameters.

| Model Name | Heads | Codebook Dim | Codebook Size | Commitment Weight | Threshold EMA Dead Code | Dim | ResNet Type | |

| VQ-ResNet50 | 8 | 256 | 128 | 0.0 | 2 | 2048 | 50 | |

| VQ-FrozenResNet34 | 8 | 128 | 256 | 5.0 | 1 | 512 | 34 | |

| SimilarityMeasurement | Model | Resolution | Kernel | Padding | should_channel_kgrams | mAP | Landmarks | IMSketch | iNat | Instre | SOP | Faces | |

| KGramTF-IDF | VQ-ResNet50 | 512 | 4 | 0 | False | 0.358996 | 0.58213 | 0.27893 | 0.24168 | 0.28393 | 0.60545 | 0.16185 | |

| KGramTF-IDF | VQ-ResNet50 | 512 | 5 | 1 | False | 0.358116 | 0.58626 | 0.27578 | 0.23728 | 0.27722 | 0.61037 | 0.16180 | |

| KGramTF-IDF | VQ-ResNet50 | 512 | 3 | 0 | False | 0.356071 | 0.58348 | 0.27313 | 0.23403 | 0.27203 | 0.61197 | 0.16178 | |

| KGramTF-IDF | VQ-FrozenResNet34 | 512 | 4 | 0 | False | 0.347656 | 0.54185 | 0.26551 | 0.21882 | 0.27872 | 0.62476 | 0.15628 | |

| KGramTF-IDF | VQ-FrozenResNet34 | 512 | 5 | 1 | False | 0.347565 | 0.54985 | 0.26217 | 0.21274 | 0.27008 | 0.63362 | 0.15695 | |

| KGramTF-IDF | VQ-ResNet50 | 614 | 4 | 0 | False | 0.347015 | 0.58669 | 0.25582 | 0.21834 | 0.26909 | 0.59012 | 0.16203 | |

| KGramTF-IDF | VQ-ResNet50 | 614 | 5 | 1 | False | 0.345833 | 0.58998 | 0.25283 | 0.21417 | 0.26241 | 0.59373 | 0.16187 | |

| KGramTF-IDF | VQ-FrozenResNet34 | 512 | 3 | 0 | False | 0.344792 | 0.54615 | 0.25851 | 0.21024 | 0.26400 | 0.63323 | 0.15663 | |

| KGramTF-IDF | VQ-ResNet50 | 614 | 3 | 0 | False | 0.343568 | 0.58732 | 0.24950 | 0.21074 | 0.25619 | 0.59582 | 0.16184 | |

| KGramTF-IDF | VQ-ResNet50 | 256 | 4 | 2 | False | 0.339198 | 0.47780 | 0.35360 | 0.24893 | 0.24240 | 0.56072 | 0.15174 | |

| KGramTF-IDF | VQ-ResNet50 | 256 | 3 | 1 | False | 0.339197 | 0.47687 | 0.35379 | 0.24947 | 0.24325 | 0.55978 | 0.15204 | |

| KGramTF-IDF | VQ-FrozenResNet34 | 614 | 4 | 0 | False | 0.338142 | 0.55188 | 0.24478 | 0.19829 | 0.26012 | 0.61759 | 0.15620 | |

| KGramTF-IDF | VQ-FrozenResNet34 | 614 | 5 | 1 | False | 0.337825 | 0.55749 | 0.24103 | 0.19377 | 0.25305 | 0.62484 | 0.15677 | |

| KGramTF-IDF | VQ-FrozenResNet34 | 614 | 3 | 0 | False | 0.335068 | 0.55416 | 0.23722 | 0.19122 | 0.24681 | 0.62444 | 0.15656 | |

| KGramTF-IDF | VQ-FrozenResNet34 | 512 | 5 | 1 | True | 0.330183 | 0.52225 | 0.24846 | 0.21264 | 0.25831 | 0.59082 | 0.14862 | |

| KGramTF-IDF | VQ-FrozenResNet34 | 512 | 4 | 0 | True | 0.326344 | 0.50732 | 0.24662 | 0.21861 | 0.26113 | 0.57731 | 0.14708 | |

| KGramTF-IDF | VQ-FrozenResNet34 | 512 | 3 | 0 | True | 0.323291 | 0.51431 | 0.24105 | 0.20902 | 0.24815 | 0.58027 | 0.14695 | |

| KGramTF-IDF | VQ-FrozenResNet34 | 614 | 5 | 1 | True | 0.317817 | 0.53150 | 0.22737 | 0.19314 | 0.23635 | 0.57025 | 0.14828 | |

| KGramTF-IDF | VQ-FrozenResNet34 | 256 | 4 | 2 | False | 0.315947 | 0.42983 | 0.29231 | 0.21948 | 0.24301 | 0.56028 | 0.15077 | |

| KGramTF-IDF | VQ-FrozenResNet34 | 256 | 3 | 1 | False | 0.315619 | 0.42757 | 0.29264 | 0.22086 | 0.24486 | 0.55697 | 0.15081 | |

| KGramTF-IDF | VQ-FrozenResNet34 | 614 | 4 | 0 | True | 0.314760 | 0.51752 | 0.22639 | 0.19760 | 0.23944 | 0.56070 | 0.14691 | |

| KGramTF-IDF | VQ-FrozenResNet34 | 614 | 3 | 0 | True | 0.310504 | 0.52159 | 0.22073 | 0.18945 | 0.22604 | 0.55865 | 0.14656 | |

| KGramTF-IDF | VQ-FrozenResNet34 | 256 | 4 | 2 | True | 0.304981 | 0.41032 | 0.28407 | 0.21846 | 0.23638 | 0.53534 | 0.14531 | |

| KGramTF-IDF | VQ-FrozenResNet34 | 256 | 3 | 1 | True | 0.304241 | 0.40883 | 0.28296 | 0.21938 | 0.23723 | 0.53142 | 0.14563 | |

| KGramTF-IDF | VQ-ResNet50 | 512 | 4 | 0 | True | 0.296027 | 0.49294 | 0.21070 | 0.22187 | 0.21419 | 0.48399 | 0.15247 | |

| KGramTF-IDF | VQ-ResNet50 | 512 | 5 | 1 | True | 0.295039 | 0.49761 | 0.20775 | 0.21758 | 0.20830 | 0.48800 | 0.15100 | |

| KGramTF-IDF | VQ-ResNet50 | 512 | 3 | 0 | True | 0.286522 | 0.48304 | 0.19891 | 0.21354 | 0.19940 | 0.47538 | 0.14887 | |

| KGramTF-IDF | VQ-ResNet50 | 614 | 4 | 0 | True | 0.286102 | 0.49331 | 0.19496 | 0.20189 | 0.20796 | 0.46663 | 0.15187 | |

| KGramTF-IDF | VQ-ResNet50 | 256 | 3 | 1 | True | 0.285037 | 0.41862 | 0.26744 | 0.22793 | 0.18756 | 0.46522 | 0.14345 | |

| KGramTF-IDF | VQ-ResNet50 | 614 | 5 | 1 | True | 0.285007 | 0.49790 | 0.19262 | 0.19820 | 0.20234 | 0.46900 | 0.14998 | |

| KGramTF-IDF | VQ-ResNet50 | 256 | 4 | 2 | True | 0.283205 | 0.41683 | 0.26473 | 0.22735 | 0.18539 | 0.46198 | 0.14294 | |

| KGramTF-IDF | VQ-ResNet50 | 614 | 3 | 0 | True | 0.276272 | 0.48147 | 0.18505 | 0.19359 | 0.19382 | 0.45562 | 0.14808 | |

| CropAndJaccard | VQ-FrozenResNet34 | 614 | NAN | NAN | NAN | 0.261098 | 0.38146 | 0.18768 | 0.18802 | 0.23603 | 0.42209 | 0.15130 | |

| CropAndJaccard | VQ-ResNet50 | 614 | NAN | NAN | NAN | 0.216076 | 0.31513 | 0.15473 | 0.18724 | 0.18264 | 0.30936 | 0.14735 | |

| CropAndJaccard | VQ-FrozenResNet34 | 512 | NAN | NAN | NAN | 0.209238 | 0.32666 | 0.13962 | 0.14898 | 0.15798 | 0.33274 | 0.14945 | |

| CropAndJaccard | VQ-FrozenResNet34 | 256 | NAN | NAN | NAN | 0.196921 | 0.30610 | 0.13622 | 0.14287 | 0.14431 | 0.30527 | 0.14675 | |

| CropAndJaccard | VQ-ResNet50 | 512 | NAN | NAN | NAN | 0.165012 | 0.21407 | 0.12580 | 0.14234 | 0.12543 | 0.23798 | 0.14446 | |

| CropAndJaccard | VQ-ResNet50 | 256 | NAN | NAN | NAN | 0.154992 | 0.19104 | 0.12308 | 0.13314 | 0.11553 | 0.22524 | 0.14192 | |

Discussion

Our image retrieval system retrieves relevant images across the GPR1200 dataset, with reasonable mAP values for different kernel sizes and similarity measures. 3D-Kgrams usually performed much worse than 2d-kgrams. Raising the input image resolution to 614 did not increase mAP scores. Increasing the kernel size past 4, did not improve scores on our best VQ-ResNet50 model. This method still needs more refinement, but overall we recommend finetuning larger, more accurate VQ-ResNet models for obtaining the tokens, and then using 2D k-grams, with a kernel size of 3.

The choice of similarity measure and kernel size significantly impact the retrieval performance; in general, the BoW and TF-IDF approach provides better results than the Jaccard similarity measure. This shows that multi dimensional K-Grams extracted meaningful information from discretized tokens from our VQ-Resnet.

The authors of GPR 1200 obtained a mAP score of 42.8 % using kNN from the output of the last activation of a ResNet101 V2 [7]. They obtain higher scores using models with BiT pretraining [3]. Our score from our best configuration is 6 % worse. With pretraining of our VQ-ResNet on ImageNet 21k, we may be able to improve our scores somewhat. Generally, this reduction in score may be acceptable; our indexed features can be inputted into traditional text search engines.

Conclusion

In this data mining project, we aimed to develop an efficient and accurate image retrieval system for large-scale datasets by combining deep learning models with traditional data mining techniques. We chose the diverse GPR1200 dataset and employed a VQ-ResNet model along with Kgrams for image preprocessing. Our approach integrated image preprocessing, Bag-of-Words representations, and various similarity calculation methods.

We compared different methods, such as Jaccard Similarity and TF-IDF, and assessed the performance using the Mean Average Precision (mAP) metric. Our results revealed that the VQ-ResNet model, combined with preprocessing, Bag-of-Words representation, and TF-IDF similarity measure, delivered a robust image retrieval system.

References

- [1]

-

Yue Cao et al. “Deep Quantization Network for Efficient Image Retrieval”. In: Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence. AAAI’16. Phoenix, Arizona: AAAI Press, 2016, pp. 3457–3463.

- [2]

-

Shiv Ram Dubey. “A Decade Survey of Content Based Image Retrieval using Deep Learning”. In: CoRR abs/2012.00641 (2020). arXiv: 2012.00641. url: https://arxiv.org/abs/2012.00641.

- [3]

-

Alexander Kolesnikov et al. Big Transfer (BiT): General Visual Representation Learning. 2020. arXiv: 1912.11370 [cs.CV].

- [4]

-

Xiaobin Liu et al. E2BoWs: An End-to-End Bag-of-Words Model via Deep Convolutional Neural Network. 2017. arXiv: 1709.05903 [cs.CV].

- [5]

-

Rayhane Mama et al. NWT: Towards natural audio-to-video generation with representation learning. 2021. arXiv: 2106.04283 [cs.SD].

- [6]

-

A�ron van den Oord, Oriol Vinyals, and Koray Kavukcuoglu. “Neural Discrete Representation Learning”. In: CoRR abs/1711.00937 (2017). arXiv: 1711.00937. url: http://arxiv.org/abs/1711.00937.

- [7]

-

Konstantin Schall et al. “GPR1200: A Benchmark for General-Purpose Content-Based Image Retrieval”. In: CoRR abs/2111.13122 (2021). arXiv: 2111.13122. url: https://arxiv.org/abs/2111.13122.

- [8]

-

Wengang Zhou, Houqiang Li, and Qi Tian. “Recent Advance in Content-based Image Retrieval: A Literature Survey”. In: CoRR abs/1706.06064 (2017). arXiv: 1706 . 06064. url: http://arxiv.org/abs/1706.06064.